Blender 4.2: Compositor¶

Added¶

- Added an overlay that displays each node's last execution time. The overlay is disabled by default and can be enabled from the Overlays pop-over. Execution time statistics are gathered during compositor evaluation in the node editor. (467a132166)

- The Legacy Cryptomatte node is now supported in the Viewport Compositor. (2bd80f4b7a)

- A new Bloom mode was added to the Glare node. It produces similar results to the Fog Glow mode but is much faster to compute, has a greater highlights spread, and has a smoother falloff. In the future, the Fog Glow mode will be improved to be faster and more physically accurate. (f4f22b64eb)

Changed¶

- The Hue Correct node now evaluates the saturation and value curves at the original input hue, rather than at the updated hue produced by evaluating the hue curve. (69920a0680)

- The Hue Correct node now wraps around, so discontinuities at the red hue will no longer be an issue. (8812be59a4)

- The Blur node now assumes extended image boundaries, that is, it treats pixels outside the image boundary as having the same color as their closest boundary pixels. Previously, it ignored such pixels and adjusted the blur weights accordingly. (c409f2f7c6)

- The Vector Blur node was rewritten to match EEVEE's motion blur. The Curved, Minimum, and Maximum options were removed but might be restored later. (b229d32086)
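
The Blur node boundary change listed above can be illustrated with a minimal sketch. It uses a 1D box filter on a plain Python list, which is an assumption made for brevity and not the compositor's actual implementation; the function name `box_blur_1d` and its `extend` flag are hypothetical.

```python
def box_blur_1d(pixels, radius, extend):
    """Blur a row of pixel values with a box filter of the given radius.

    extend=True : samples outside the row take the value of the nearest
                  edge pixel (extended boundaries, the new behavior).
    extend=False: samples outside the row are skipped and the remaining
                  weights are renormalized (the previous behavior).
    """
    n = len(pixels)
    result = []
    for i in range(n):
        total = 0.0
        count = 0
        for j in range(i - radius, i + radius + 1):
            if 0 <= j < n:
                total += pixels[j]
                count += 1
            elif extend:
                # Clamp the index to the closest boundary pixel.
                total += pixels[min(max(j, 0), n - 1)]
                count += 1
        result.append(total / count)
    return result


row = [1.0, 0.0, 0.0, 0.0]
extended = box_blur_1d(row, 1, extend=True)
renormalized = box_blur_1d(row, 1, extend=False)
print(extended[0], renormalized[0])
```

The two modes only differ near the edges: the bright edge pixel keeps more of its value with extended boundaries because it is effectively counted twice, while interior pixels come out the same in both modes.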

Improved Render Compositor¶

The render compositor has been rewritten to improve performance, often making it several times faster than before.

The compositor currently works on the CPU, but GPU acceleration is coming soon as well.

There are some changes in behavior. These were made so that the final render and 3D viewport compositor work consistently, and can run efficiently on the GPU. Manual adjustments might be necessary to get the same result as before.

This mainly affects canvas and single-value handling: the new implementation follows strict left-to-right node evaluation, including canvas detection. As a result, relative transforms behave differently in some cases, and single-value inputs (such as RGB Input) cannot be transformed as images.

Each of the following sections describes a difference in behavior that might affect the result of old compositing setups.

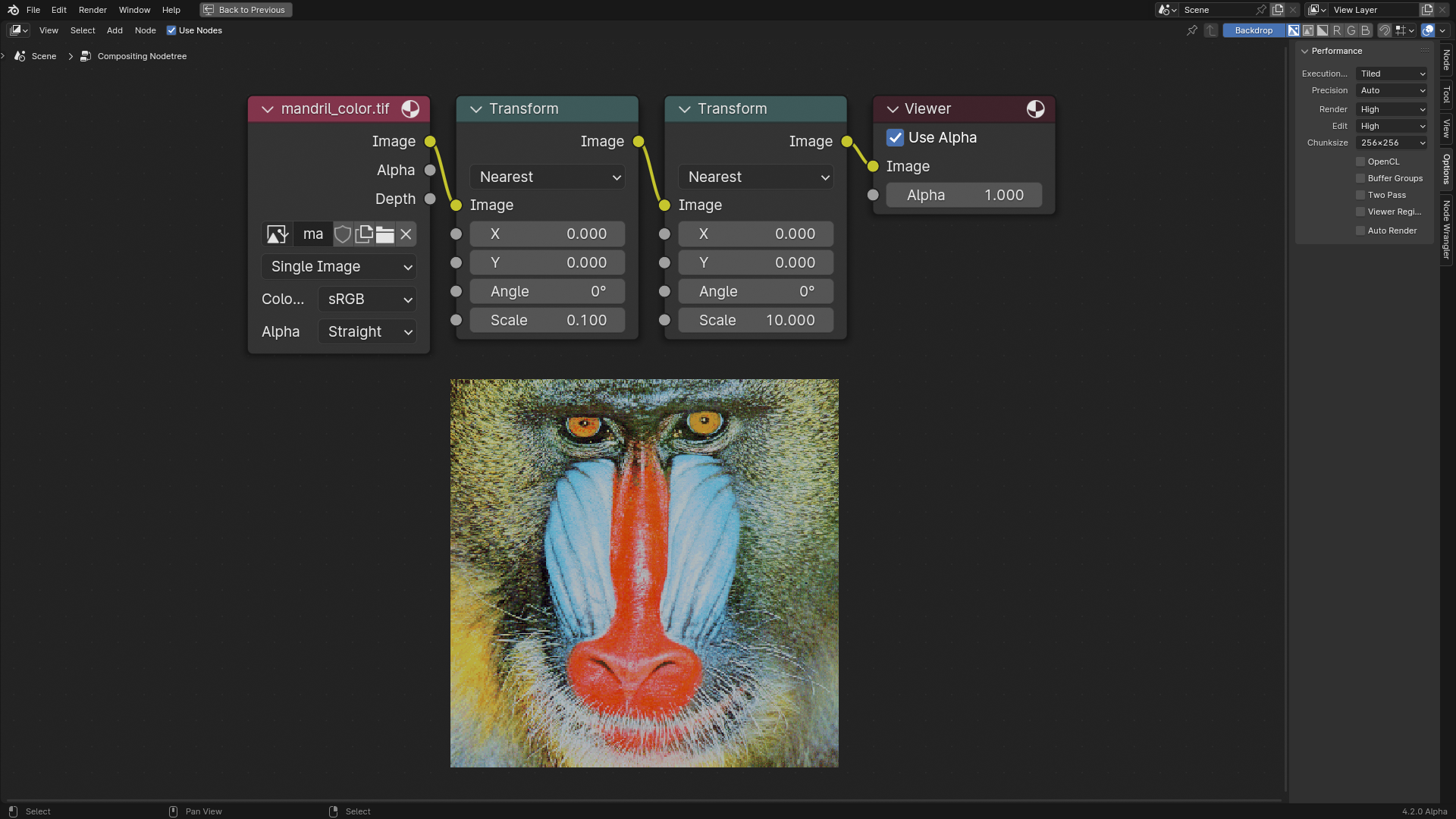

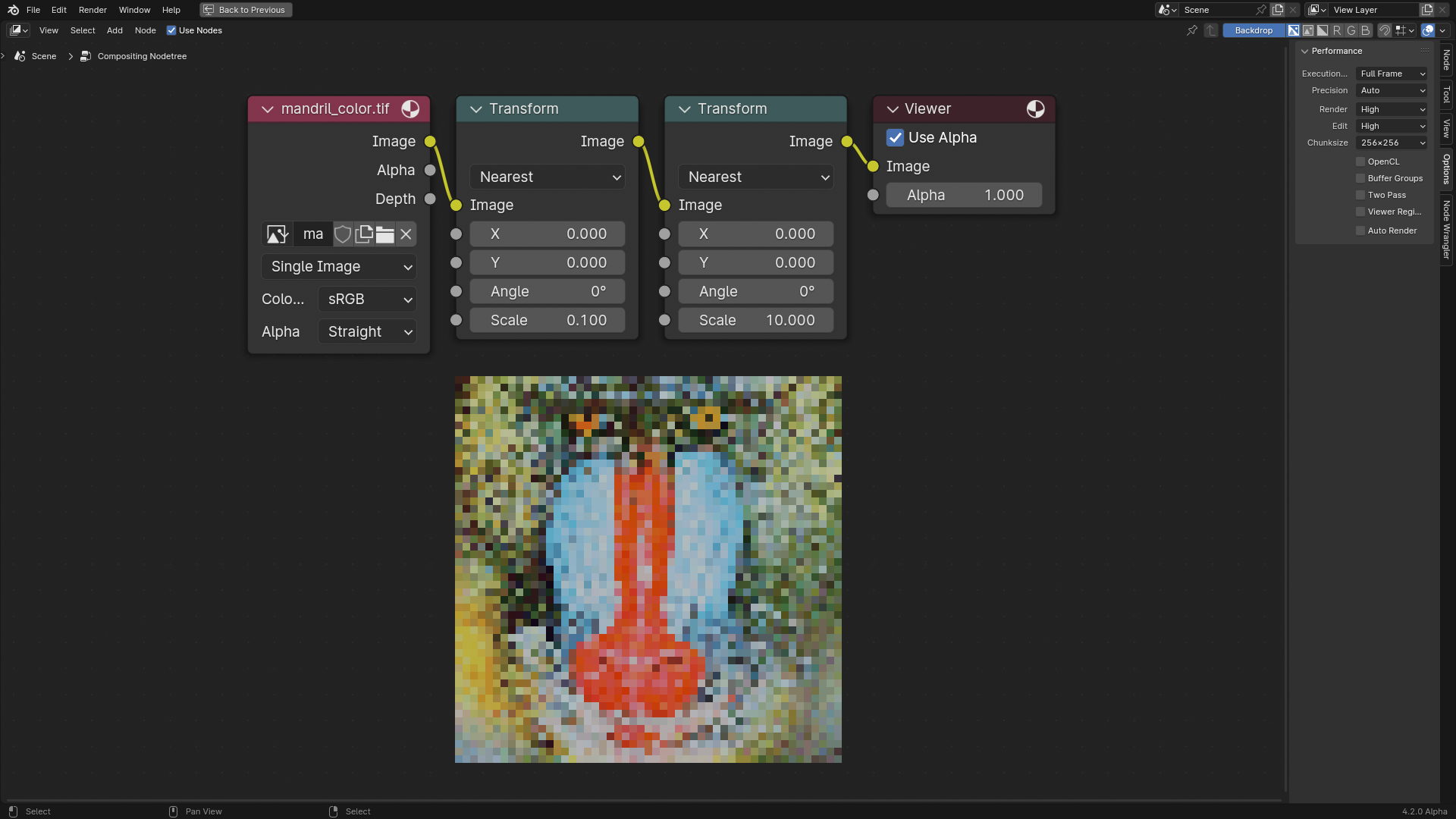

Transformations¶

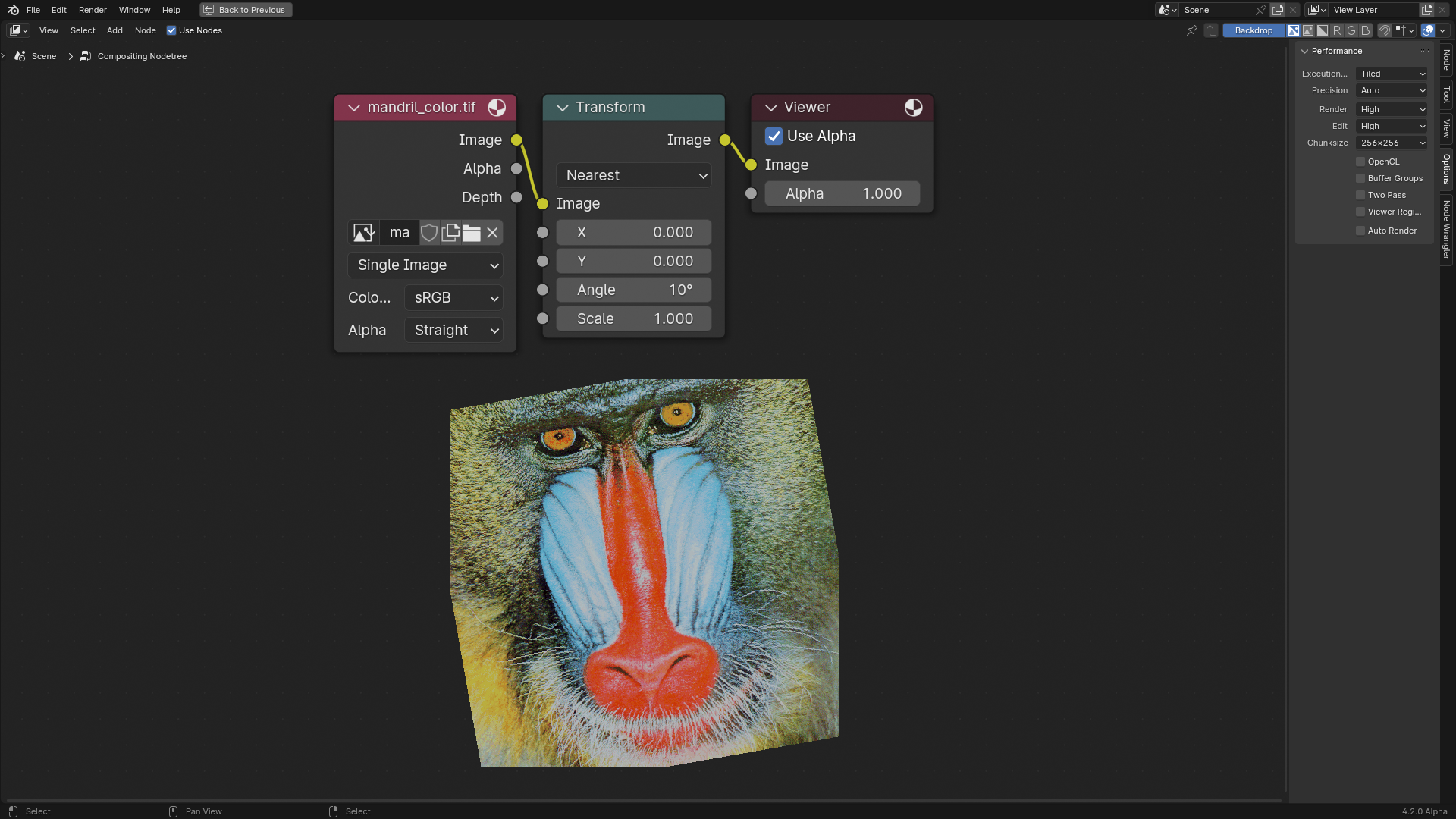

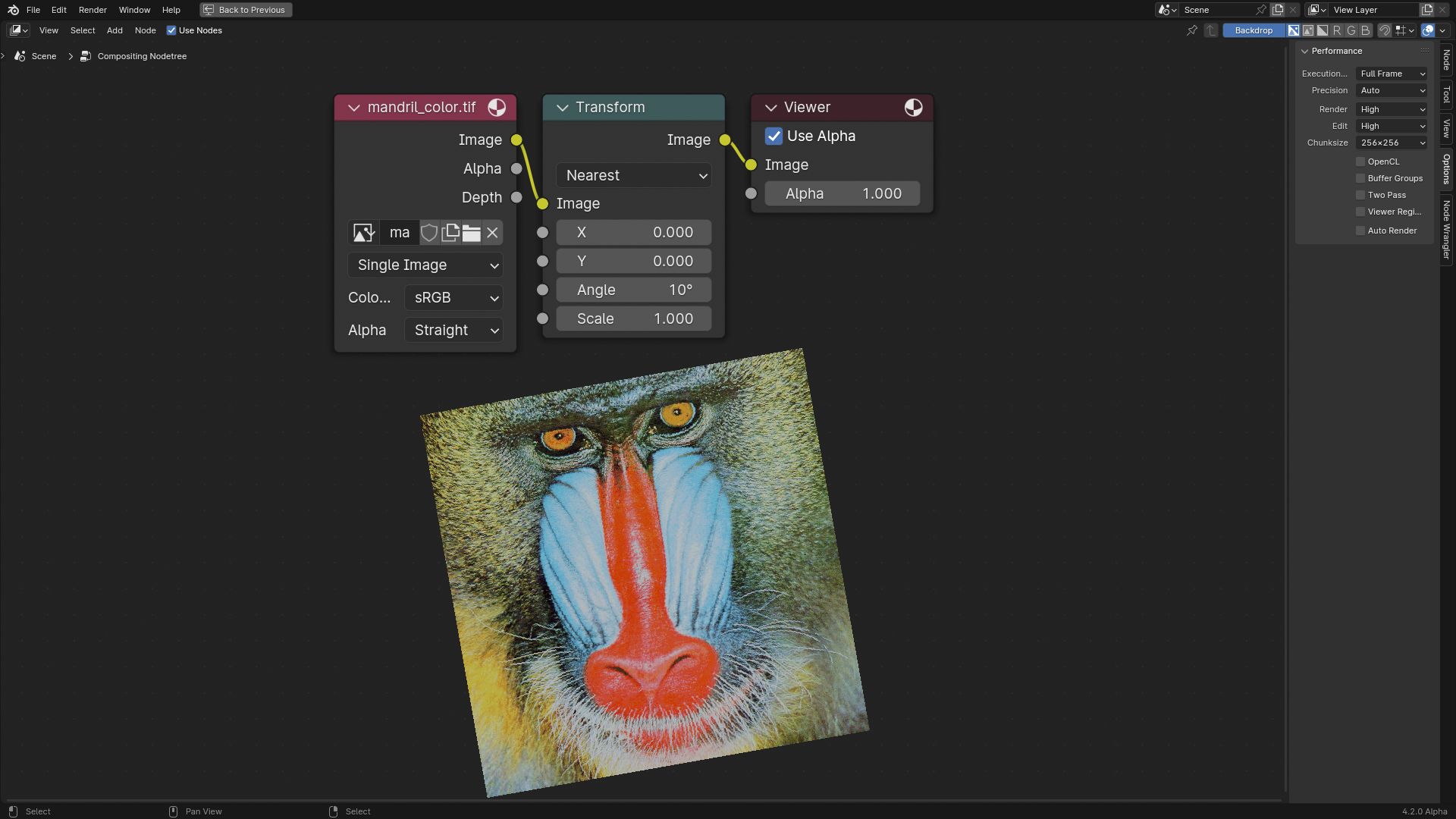

Transforms are now applied immediately at the transform nodes, meaning that scaling down an image destructively reduces its resolution. In the old compositor, transformations were delayed until it was necessary to apply them. This is demonstrated in the following example, where an image is scaled down and then scaled up again. Since the scaling was delayed in the old compositor, the image didn't lose any information, while in the new compositor, the image is pixelated.

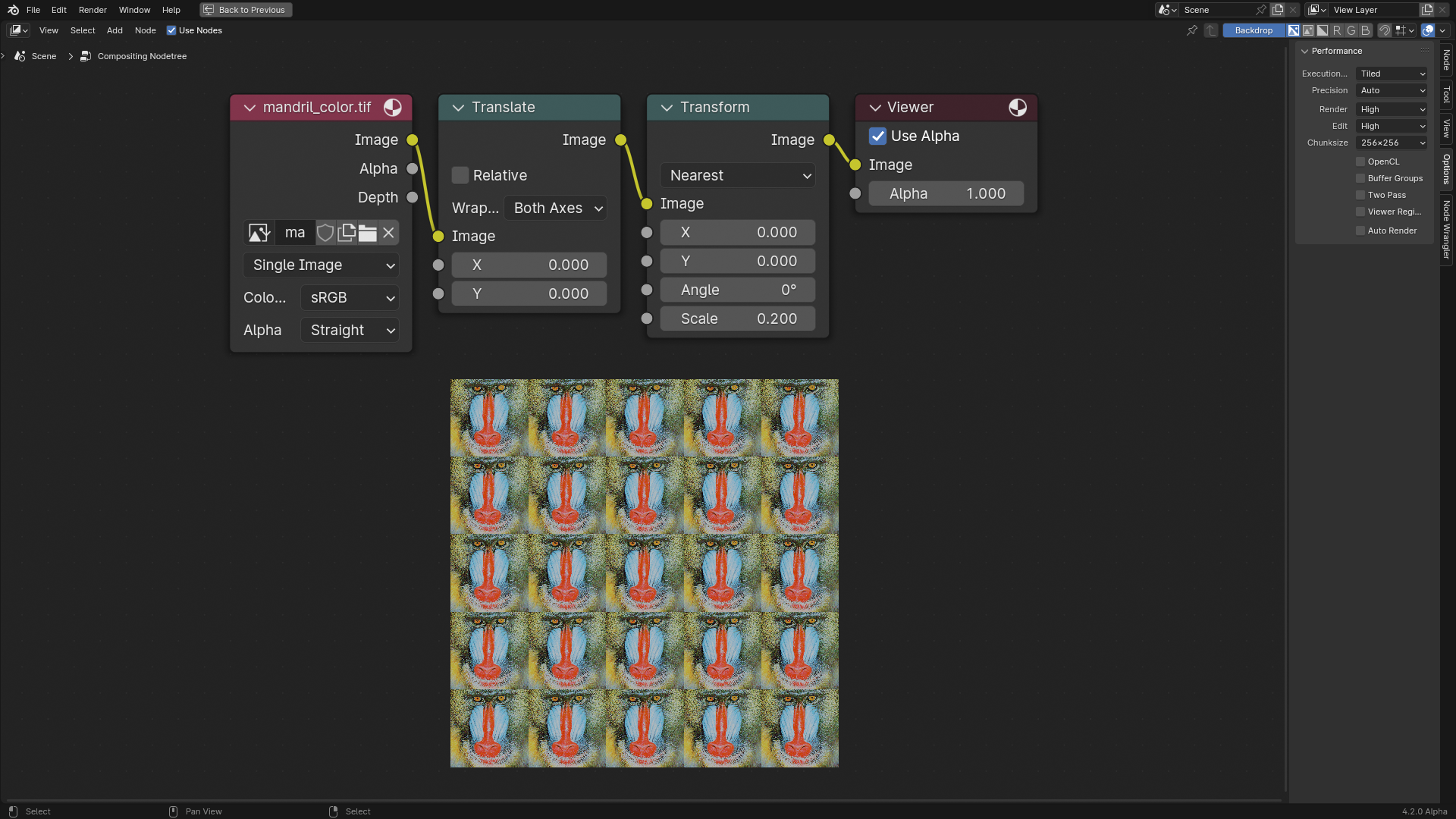

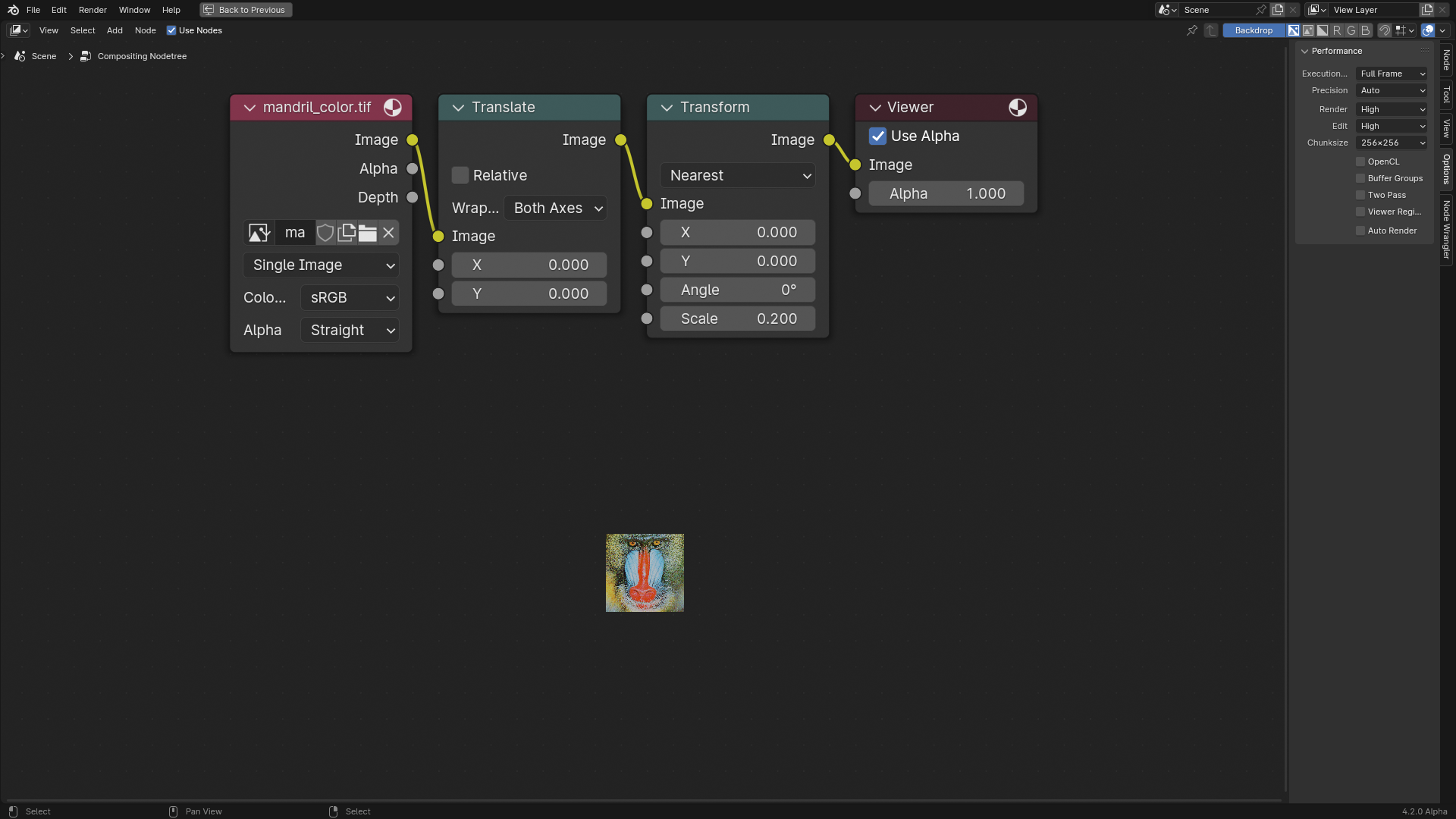
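
The difference between immediate and delayed transforms can be sketched on a single row of pixels. This is only an illustration under simplifying assumptions (1D data, nearest-neighbor resampling, a hypothetical `scale_nearest` helper); it does not reflect how either compositor is implemented.

```python
def scale_nearest(pixels, factor):
    """Resample a row of pixels by the given factor using nearest neighbor."""
    new_len = max(1, round(len(pixels) * factor))
    last = len(pixels) - 1
    # Map the center of each output pixel back into the source row.
    return [pixels[min(int((i + 0.5) / factor), last)] for i in range(new_len)]


row = [0, 1, 2, 3, 4, 5, 6, 7]

# Immediate application: every scale node resamples its input right away,
# so the detail lost when scaling down cannot be recovered when scaling up.
immediate = scale_nearest(scale_nearest(row, 0.25), 4.0)

# Delayed application: the two factors are combined first and the image is
# resampled once, so scaling by 0.25 and then by 4.0 is a no-op.
delayed = scale_nearest(row, 0.25 * 4.0)

print(immediate)
print(delayed)
```

To keep the resolution in the new compositor, avoid scaling an image down before a later node scales it back up.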

Repetition¶

The Wrapping option in the Translate node can no longer be used to repeat a pattern. It now only wraps the image within its own bounds: content that is clipped on one side reappears on the other side. This is demonstrated in the following example, where an image gets Wrapping enabled and is then scaled down. In the old compositor, the wrapping was delayed until the scale down node, producing repetitions. In the new compositor, wrapping only affects the Translate node itself, which does nothing in this setup, so the image is left unchanged and no repetition appears.

Note

This functionality will be restored in the future in a more explicit form.
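
As a rough illustration of the Wrapping behavior described in this section, the sketch below models an image as a 1D row. The helpers `translate_wrapped` and `place_on_canvas` are hypothetical, and the `repeat` flag is only a loose stand-in for the old delayed wrapping, not a description of the actual code.

```python
def translate_wrapped(pixels, offset):
    """Shift a row by offset pixels; content pushed out on one side
    reappears on the other side, and the row keeps its size."""
    n = len(pixels)
    return [pixels[(i - offset) % n] for i in range(n)]


def place_on_canvas(pixels, canvas_len, repeat):
    """Place a row at the start of a wider canvas.

    repeat=True : positions beyond the row tile it (old, delayed wrapping).
    repeat=False: positions beyond the row stay empty (new behavior).
    """
    n = len(pixels)
    return [pixels[i % n] if (repeat or i < n) else None
            for i in range(canvas_len)]


row = ["a", "b", "c", "d"]
shifted = translate_wrapped(row, 1)      # wraps within the row's own bounds
unchanged = translate_wrapped(row, 0)    # zero translation does nothing

small = ["a", "b"]                       # stands in for the scaled-down image
old_style = place_on_canvas(small, 4, repeat=True)
new_style = place_on_canvas(small, 4, repeat=False)
print(shifted, old_style, new_style)
```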

Size Inference¶

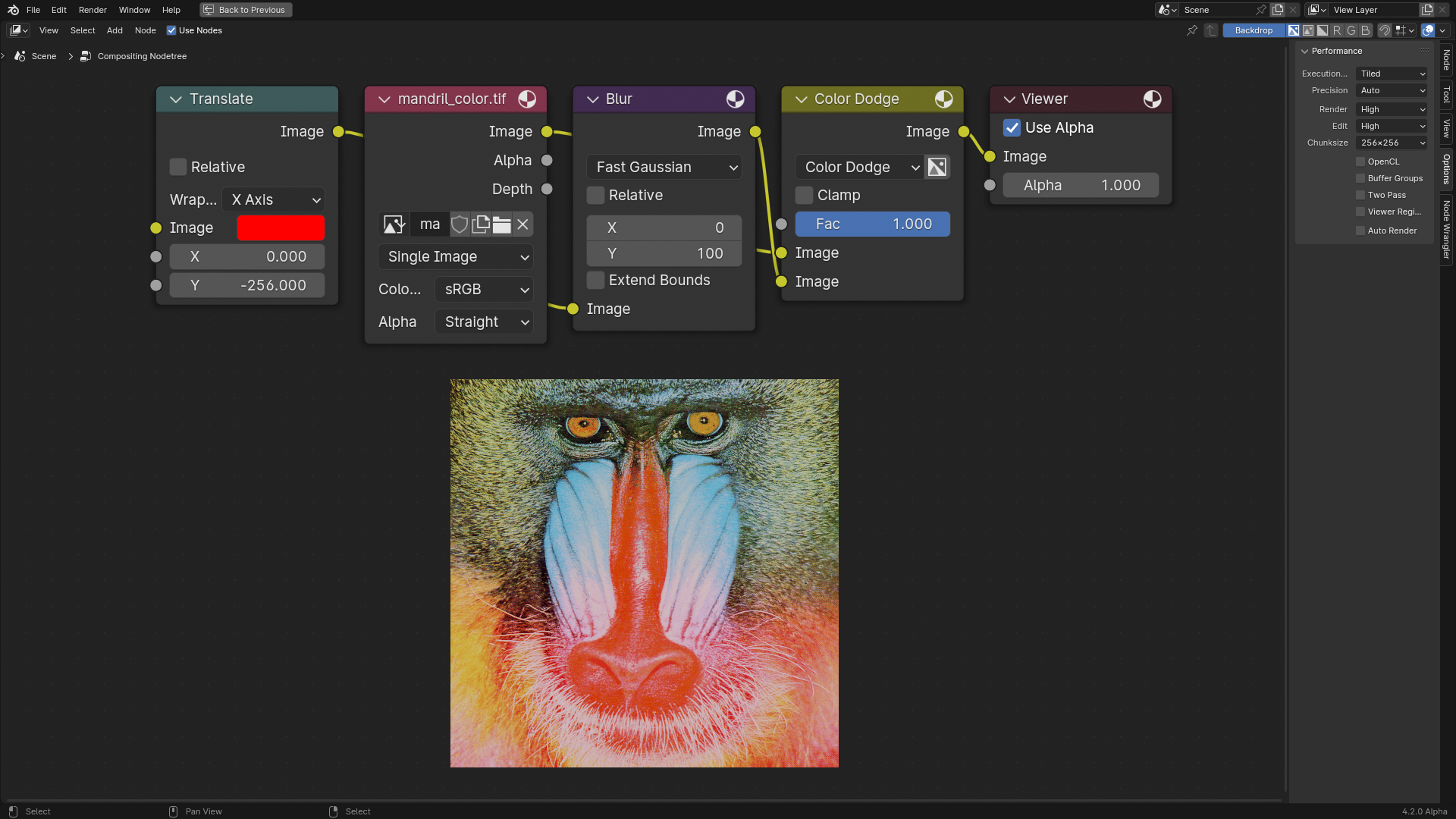

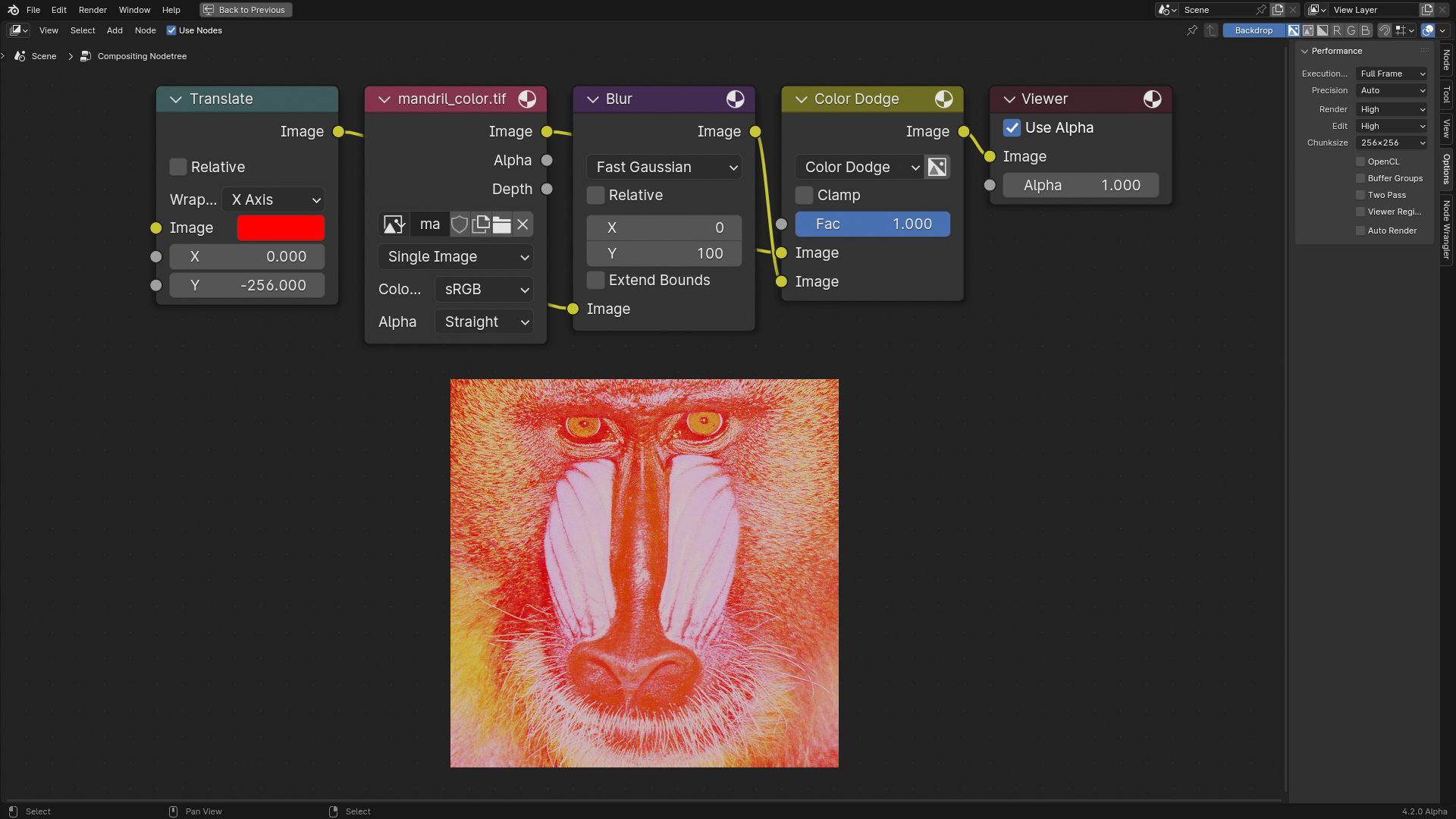

The old compositor tried to infer an image size from upstream/output nodes, while the new compositor evaluates the node tree from left to right without inferring image sizes from upstream nodes. The new behavior is more predictable, but some tricks that were previously possible no longer work. This is demonstrated in the following example. In the old compositor, the Translate node inferred its size from the mandrill image, so it behaved as if it had received a full image of that size filled with red. Translating that image down left a black area at the top, which turned into a gradient when blurred.

The new compositor, on the other hand, treats the Translate node's input as a single red color, which cannot be transformed. Similarly, the Blur node treats its input as a single red value and passes it through without blurring. Finally, the color mix node receives a single red color and mixes it with the entire mandrill image, as most nodes do.
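
The single-value handling described above can be sketched as follows. Images are modeled as 1D lists and single values as plain floats; all function names are hypothetical, and expanding the red value to the size of the other image is only an approximation of the old size inference.

```python
def translate(value, offset):
    """Shift an image by offset pixels, filling the exposed area with black."""
    if not isinstance(value, list):
        return value  # a single value has no extent, so nothing moves
    n = len(value)
    return [value[i - offset] if 0 <= i - offset < n else 0.0
            for i in range(n)]


def blur(value, radius):
    """Box blur with extended boundaries; single values pass through."""
    if not isinstance(value, list):
        return value
    n = len(value)
    window = 2 * radius + 1
    return [sum(value[min(max(j, 0), n - 1)]
                for j in range(i - radius, i + radius + 1)) / window
            for i in range(n)]


def mix(image, other, factor=0.5):
    """Mix an image with another image or with a single value."""
    if not isinstance(other, list):
        other = [other] * len(image)  # a single value applies to every pixel
    return [(1 - factor) * p + factor * q for p, q in zip(image, other)]


mandrill = [0.2, 0.4, 0.6, 0.8]  # stand-in for the mandrill image
red = 1.0                        # stand-in for the single red color

# New behavior: the single value passes through Translate and Blur untouched.
new_overlay = blur(translate(red, 2), 1)
new_result = mix(mandrill, new_overlay)

# Approximation of the old behavior: the value is first expanded to the
# inferred size, so translating and blurring it produces a gradient.
old_overlay = blur(translate([red] * len(mandrill), 2), 1)
old_result = mix(mandrill, old_overlay)
print(new_overlay, old_overlay)
```

To reproduce the old result in the new compositor, the red color has to be turned into a real image of the desired size before it reaches the Translate node.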

Clipping¶

The old compositor clipped images if their inferred size was smaller than their actual size, while the new compositor does no size inference and consequently does not clip images. This is demonstrated in the following example.

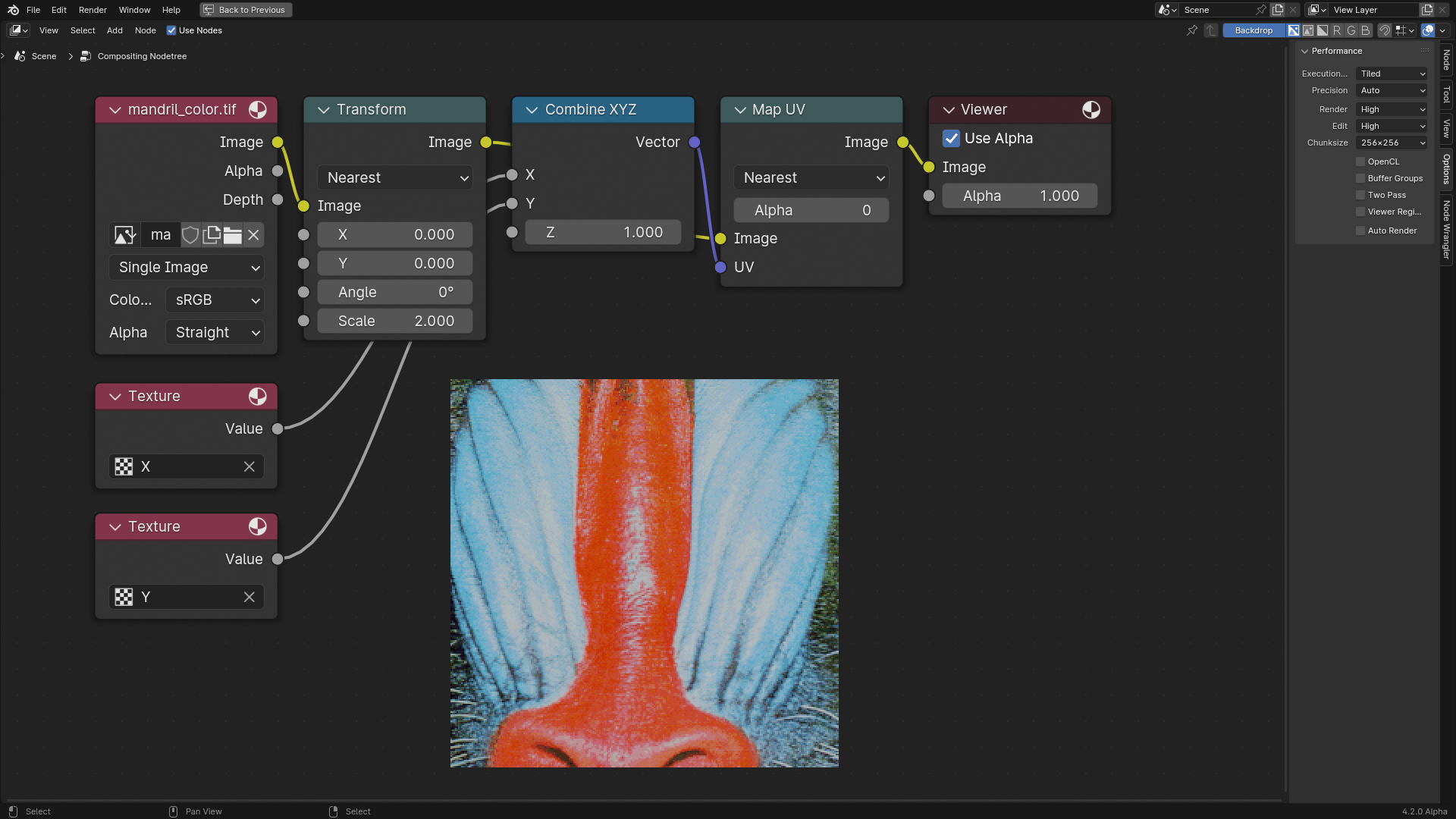

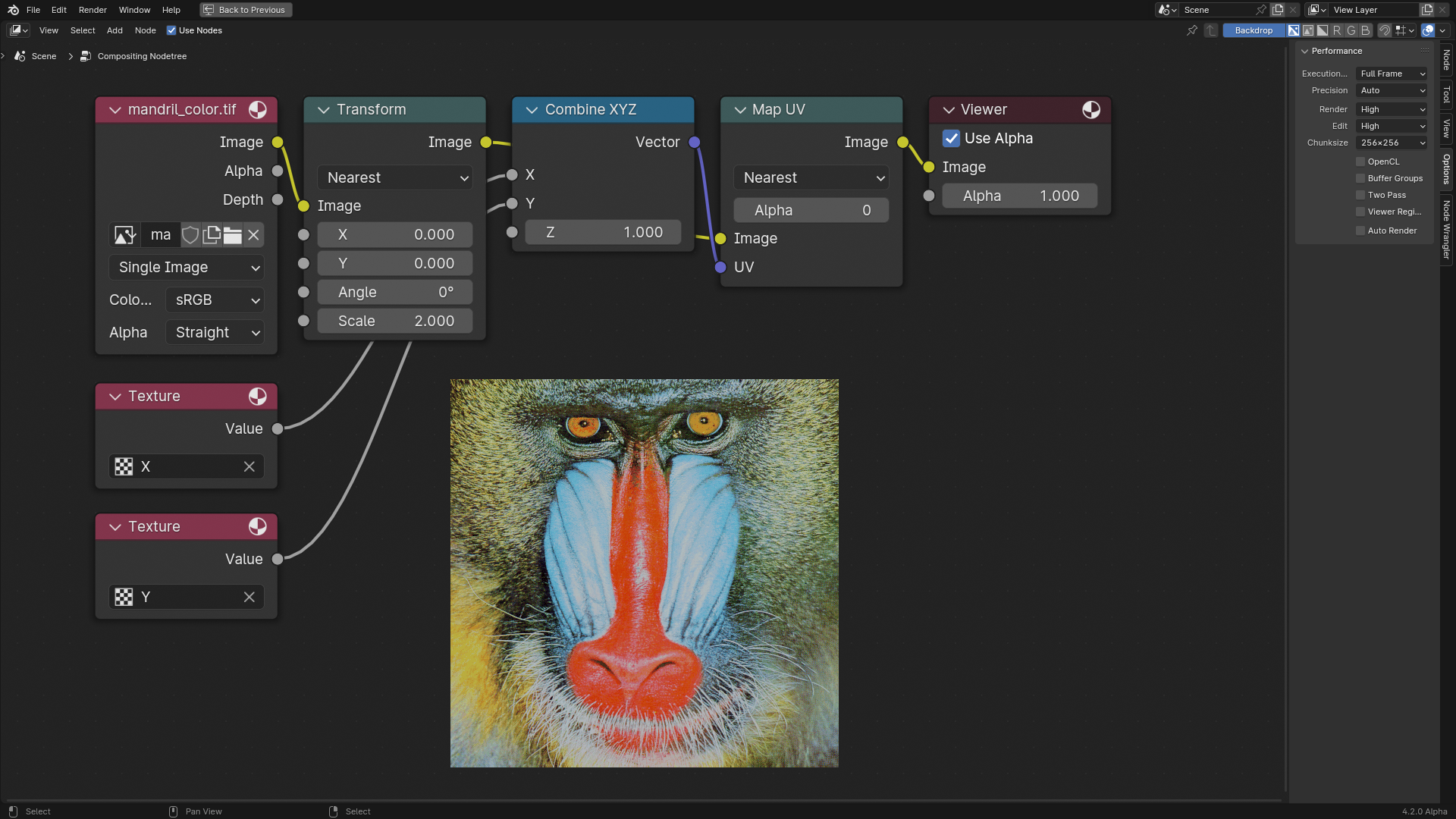

Sampling Space¶

The old compositor sampled images in their inferred space, which could be clipped if the inferred size was smaller than the actual image size, as described above. The new compositor samples images in their own space. This is demonstrated in the following example, where a scaled-up image is sampled in its entirety using normalized [0, 1] gradients as the sampling coordinates. The old compositor produced clipped images since its inferred size was also clipped, while the new compositor produces full images.
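
A small sketch can make the two sampling spaces concrete. It assumes a 1D image, nearest-neighbor lookup, and a clip region centered on the image; the helper `sample_normalized` and the centered clip are assumptions for illustration and are not taken from the compositor's code.

```python
def sample_normalized(pixels, coords, visible_len=None):
    """Look up normalized [0, 1] coordinates in a row of pixels.

    visible_len limits sampling to a centered region of that many pixels,
    mimicking an inferred size smaller than the image. By default the
    coordinates span the whole image, that is, the image's own space.
    """
    span = len(pixels) if visible_len is None else visible_len
    start = (len(pixels) - span) // 2
    return [pixels[start + min(int(u * span), span - 1)] for u in coords]


scaled_up = [0, 1, 2, 3, 4, 5, 6, 7]     # stand-in for the scaled-up image
coords = [0.125, 0.375, 0.625, 0.875]    # normalized gradient, 4 samples

own_space = sample_normalized(scaled_up, coords)                # full image
clipped = sample_normalized(scaled_up, coords, visible_len=4)   # middle only
print(own_space, clipped)
```

Sampling in the image's own space covers the full range of pixels, while the clipped variant only ever reaches the central region.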