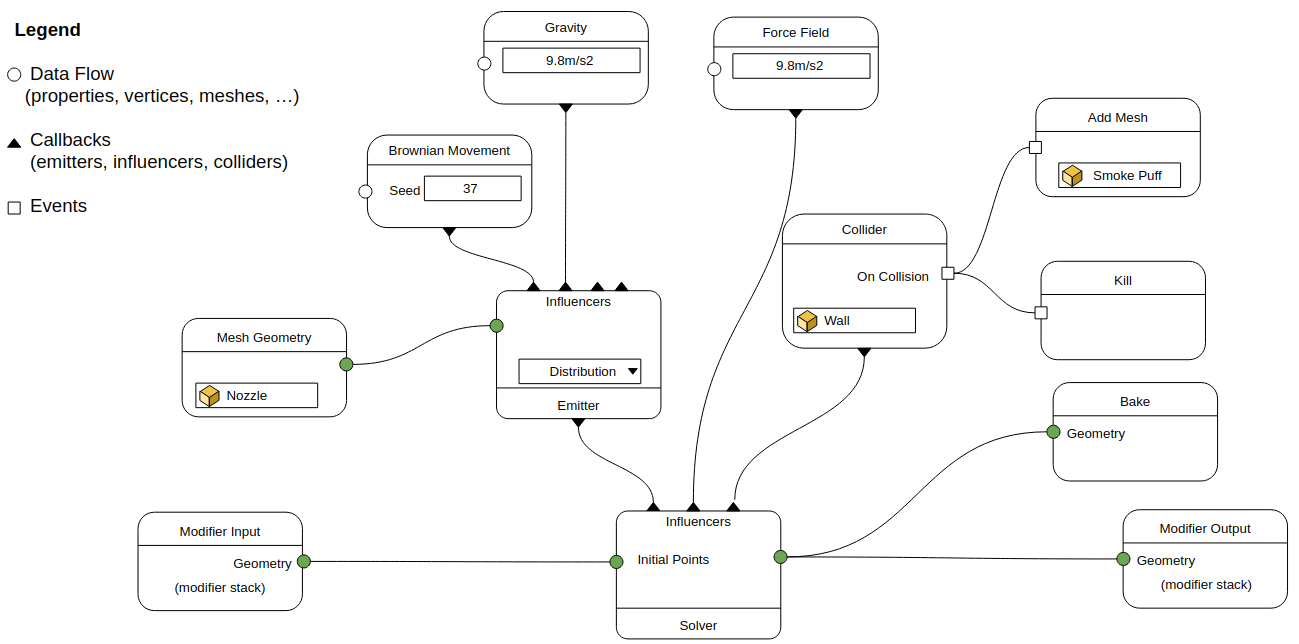

Event-Based Simulation Concept

Many simulations can be implemented with existing geometry nodes. However, a more complex setup could be considered in the future. The design in this section has not been validated since it was last discussed in 2020, although many parts of it may still apply. Events, in particular, need a different representation for their callbacks.

Conversion vs Emission

- Conversion maps one data type to another as close to 1:1 as possible

- Emission requires distribution functions and maps
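
The difference between the two can be sketched in plain Python. This is only an illustration, not an existing Blender API: the function names `convert_vertices_to_points` and `emit_points`, the dictionary layout of a point, and the use of a callable as the density map are all assumptions made for the example.

```python
import random


def convert_vertices_to_points(vertices):
    """Conversion: one output point per input vertex (close to 1:1)."""
    return [{"position": v} for v in vertices]


def emit_points(vertices, density_map, count, rng):
    """Emission: draw `count` points, weighting each vertex by a density map.

    `density_map` is a hypothetical callable returning a non-negative weight
    for a position; `random.Random.choices` acts as the distribution function.
    """
    weights = [density_map(v) for v in vertices]
    chosen = rng.choices(vertices, weights=weights, k=count)
    return [{"position": v} for v in chosen]


vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]

# Conversion keeps the element count of the source data.
converted = convert_vertices_to_points(vertices)

# Emission decides the count itself and follows the density map (here: x).
emitted = emit_points(vertices, lambda v: v[0], count=10, rng=random.Random(0))
```

With conversion, the number of outputs is dictated by the input. With emission, the count and placement come from the distribution function and the map, so a vertex with zero density never produces a point.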

Simulation Context

The simulation context is defined by the current view layer. If the simulation needs to be instanced in a different scene, it has to be baked to preserve its original context.

Baking vs Caching

- Baking overrides the modifier evaluation (the baked result has no dependencies)

- Caching happens dynamically for playback performance
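
One possible reading of this distinction is sketched below. The class `SimulationResult`, its methods, and the idea of keying results by playback frame are hypothetical and chosen only for illustration. The sketch assumes that a cache is dropped when inputs change, while baked data is returned regardless.

```python
class SimulationResult:
    """Hypothetical holder for simulation output, keyed by playback frame."""

    def __init__(self, simulate):
        self._simulate = simulate  # assumed callable: frame -> data
        self._cache = {}           # filled lazily during playback
        self._baked = None         # set explicitly by bake()

    def bake(self, frames):
        """Store results permanently; evaluation no longer runs the simulation."""
        self._baked = {frame: self._simulate(frame) for frame in frames}

    def invalidate(self):
        """Called when an input changes: the cache is dropped, baked data stays."""
        self._cache.clear()

    def evaluate(self, frame):
        if self._baked is not None:
            # Baked data overrides the evaluation entirely.
            return self._baked[frame]
        if frame not in self._cache:
            self._cache[frame] = self._simulate(frame)
        return self._cache[frame]


calls = []


def simulate(frame):
    calls.append(frame)
    return frame * 2


result = SimulationResult(simulate)
result.evaluate(3)
result.evaluate(3)   # served from the cache, no second simulation run
result.invalidate()
result.evaluate(3)   # cache was dropped, so the simulation runs again
result.bake(range(1, 4))
result.invalidate()  # has no effect on baked data
baked_value = result.evaluate(2)
```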

Interaction and Physics

- Physics needs its own clock, detached from animation playback

- There is no concept of (absolute) “frames” inside a simulation
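
A common way to give physics its own clock is a fixed-timestep accumulator, sketched here. The technique and the `SimulationClock` class are assumptions for illustration and are not part of the original design. The clock is driven by elapsed time rather than by frame numbers, and the simulation only ever sees its own step count.

```python
class SimulationClock:
    """Hypothetical clock that advances in fixed steps of simulation time."""

    def __init__(self, step_seconds):
        self.step_seconds = step_seconds
        self.elapsed_steps = 0    # whole steps taken so far
        self._accumulator = 0.0   # elapsed time not yet consumed by a step

    @property
    def time(self):
        """Simulation time in seconds, derived only from the steps taken."""
        return self.elapsed_steps * self.step_seconds

    def advance(self, elapsed_seconds):
        """Consume elapsed time and return how many fixed steps to run."""
        self._accumulator += elapsed_seconds
        steps = 0
        while self._accumulator >= self.step_seconds:
            self._accumulator -= self.step_seconds
            steps += 1
        self.elapsed_steps += steps
        return steps


clock = SimulationClock(step_seconds=0.25)
first = clock.advance(0.5)     # enough time for two steps
second = clock.advance(0.125)  # not enough for a step yet
third = clock.advance(0.125)   # the leftover time now adds up to one step
```

Because the caller decides how much time to feed in, playback can pause, scrub, or run at a different rate without the solver knowing about animation frames.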

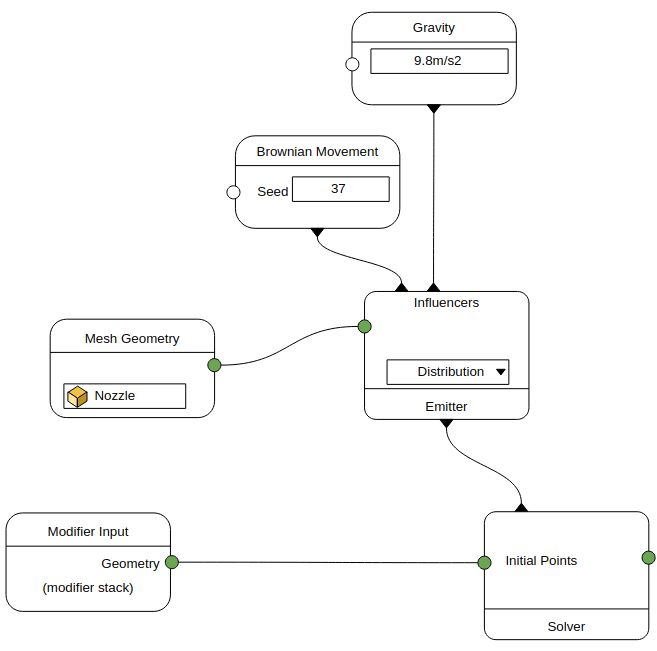

Solver Node

The solver node requires a new kind of input: the influences.

The geometry is passed to the solver as the initial points (for the particles solver). The solver reaches out to its influences, which can:

- Create or delete geometry

- Update settings (e.g., color)

- Execute operations on events, such as on collision, on birth, or on death.

In this example, the callbacks are represented as vertical lines, while the geometry dataflow is horizontal.
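
The relationship between a solver and its influences could look roughly like the following sketch. Every name in it (`Influence`, `Gravity`, `KillBelow`, `ColorOnDeath`, `ParticleSolver`) is hypothetical, particles are reduced to a height and a velocity, and collision events are left out to keep the example short.

```python
class Influence:
    """Hypothetical base class: every hook is a no-op unless overridden."""

    def on_step(self, particles, dt):
        pass

    def on_birth(self, particle):
        pass

    def on_death(self, particle):
        pass


class Gravity(Influence):
    """Updates a setting (velocity) on every step."""

    def __init__(self, acceleration=-9.8):
        self.acceleration = acceleration

    def on_step(self, particles, dt):
        for p in particles:
            p["velocity"] += self.acceleration * dt


class KillBelow(Influence):
    """Deletes geometry by flagging particles below a given height."""

    def __init__(self, height=0.0):
        self.height = height

    def on_step(self, particles, dt):
        for p in particles:
            if p["z"] < self.height:
                p["alive"] = False


class ColorOnDeath(Influence):
    """Executes an operation on the death event."""

    def on_death(self, particle):
        particle["color"] = "red"


class ParticleSolver:
    def __init__(self, initial_points, influences):
        # The incoming geometry becomes the initial points.
        self.particles = [
            {"z": z, "velocity": 0.0, "alive": True, "color": "white"}
            for z in initial_points
        ]
        self.influences = influences
        self.dead = []
        for p in self.particles:
            for influence in self.influences:
                influence.on_birth(p)

    def step(self, dt):
        # The solver reaches out to its influences first.
        for influence in self.influences:
            influence.on_step(self.particles, dt)
        survivors = []
        for p in self.particles:
            if p["alive"]:
                p["z"] += p["velocity"] * dt
                survivors.append(p)
            else:
                for influence in self.influences:
                    influence.on_death(p)
                self.dead.append(p)
        self.particles = survivors


solver = ParticleSolver(
    initial_points=[1.0, -1.0],
    influences=[Gravity(-10.0), KillBelow(0.0), ColorOnDeath()],
)
solver.step(0.5)
```

The geometry flows through `ParticleSolver.step`, while the influences are called back from inside it, which mirrors the horizontal dataflow and vertical callbacks described above.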

Emitter Node

The emitter node generates geometry in the simulation. It can receive its own set of influences that operate locally. For instance, an artist can set up gravity to affect only a subset of particles.
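
A minimal sketch of emitter-local influences follows. The `Emitter` class, the `gravity` function, and the `step` helper are hypothetical, and tagging each particle with the name of its emitter is just one assumed way to scope an influence to a subset.

```python
class Emitter:
    """Hypothetical emitter: creates particles and owns local influences."""

    def __init__(self, name, count, local_influences=()):
        self.name = name
        self.count = count
        self.local_influences = list(local_influences)

    def emit(self):
        # Each particle remembers which emitter created it.
        return [{"emitter": self.name, "velocity": 0.0} for _ in range(self.count)]


def gravity(particle, dt, acceleration=-9.8):
    """Hypothetical influence, written as a plain function for brevity."""
    particle["velocity"] += acceleration * dt


def step(emitters, particles, dt):
    """Apply each emitter's local influences only to its own particles."""
    emitter_by_name = {emitter.name: emitter for emitter in emitters}
    for p in particles:
        for influence in emitter_by_name[p["emitter"]].local_influences:
            influence(p, dt)


heavy = Emitter("heavy", count=2, local_influences=[gravity])
floaty = Emitter("floaty", count=3)  # no local influences

particles = heavy.emit() + floaty.emit()
step([heavy, floaty], particles, dt=1.0)
```

Only the particles from the `heavy` emitter accelerate; those from `floaty` keep a velocity of zero.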